- Samsung’s 12-layer HBM3E DRAM is the first of its kind, offering unmatched speed and capacity in AI memory chips.

- Samsung says it surpasses the previous HBM3 version by more than 50% in performance and capacity, and that it lets data centres use fewer GPUs, lowering costs.

- It is projected to raise average AI training speed by 34% over HBM3 8H and to deliver up to 11.5 times more AI user services in inference.

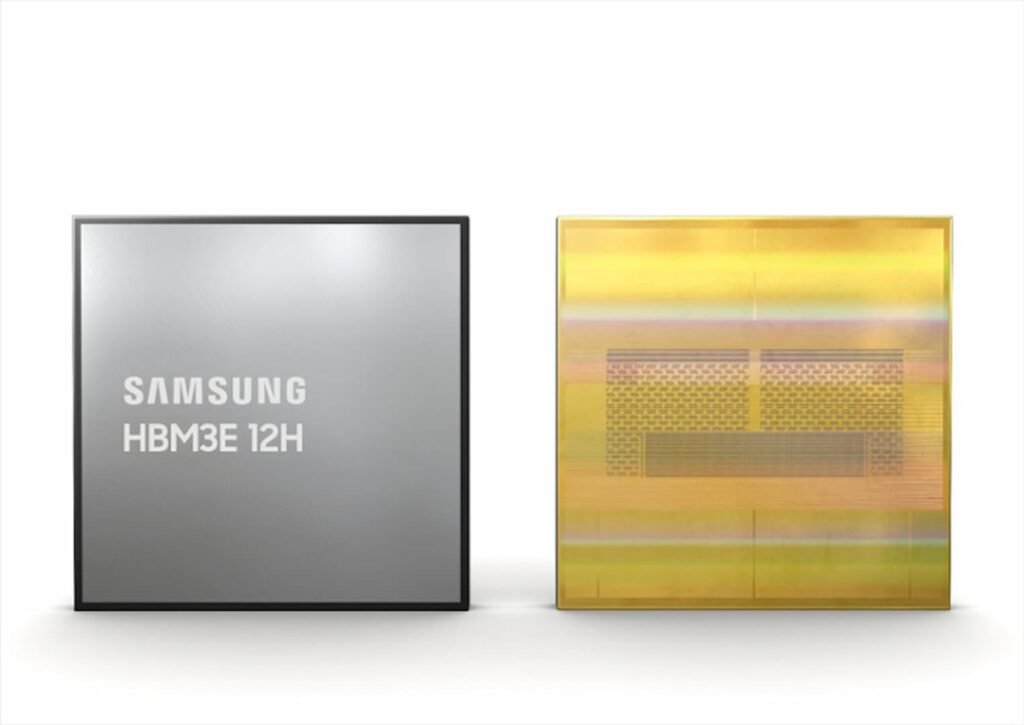

In a significant breakthrough, Samsung Electronics has unveiled the world’s first 12-layer High Bandwidth Memory 3E (HBM3E) DRAM. This revolutionary 36-gigabyte (GB) memory chip is set to redefine the landscape of Artificial Intelligence (AI) memory chips with its unparalleled data processing speed.

A New Era in Memory Chip Technology

HBM (High Bandwidth Memory) is a memory semiconductor that raises data processing speed by stacking multiple DRAM dies vertically. Stacking widens the data pathway, so larger volumes of data can be processed simultaneously. HBM3E is the fifth generation of HBM DRAM, and the 12-layer version marks a new era in memory chip technology.

Samsung’s 12-layer HBM3E DRAM boasts a maximum bandwidth of 1,280 GB per second and offers a 36 GB capacity, the highest among existing HBM chips. This development is not just a technological achievement; it’s a strategic move that positions Samsung to outpace its rival, SK Hynix, in the AI memory chip industry and secure a dominant position in the HBM market.
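To put the quoted figures in perspective, the short sketch below works through the arithmetic behind them. The layer count, capacity, and bandwidth come from the article; the 1024-bit interface width is an assumption added for illustration (it is the width commonly cited for this HBM generation, not a figure given here), and the function names are hypothetical.

```python
# Figures quoted in the article.
LAYERS = 12
STACK_CAPACITY_GB = 36
PEAK_BANDWIDTH_GB_PER_S = 1280

# Assumption, not stated in the article: a 1024-bit-wide interface per stack.
ASSUMED_INTERFACE_BITS = 1024


def capacity_per_layer_gb(stack_gb: float, layers: int) -> float:
    """Capacity contributed by each stacked DRAM layer."""
    return stack_gb / layers


def implied_pin_rate_gbps(bandwidth_gb_per_s: float, interface_bits: int) -> float:
    """Per-pin data rate in gigabits per second implied by the total bandwidth."""
    return bandwidth_gb_per_s * 8 / interface_bits


def seconds_to_read_full_stack(stack_gb: float, bandwidth_gb_per_s: float) -> float:
    """Time to stream the entire stack once at peak bandwidth."""
    return stack_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    print(capacity_per_layer_gb(STACK_CAPACITY_GB, LAYERS))
    print(implied_pin_rate_gbps(PEAK_BANDWIDTH_GB_PER_S, ASSUMED_INTERFACE_BITS))
    print(seconds_to_read_full_stack(STACK_CAPACITY_GB, PEAK_BANDWIDTH_GB_PER_S))
```

Under these assumptions each layer holds 3 GB, each pin carries about 10 gigabits per second, and the whole 36 GB stack could in principle be read in under 30 milliseconds at peak bandwidth.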

The Race for AI Memory Chip Dominance

As AI services evolve, demand is surging for high-performance DRAM in data centres, which must process large datasets rapidly. SK Hynix has led this market, holding a 50 per cent share last year. However, Samsung’s latest development could disrupt that position.

The competition in the AI memory chip market is set to intensify, with Samsung’s U.S. competitor, Micron, also announcing plans to begin mass-producing HBM3E. Meanwhile, SK Hynix started mass production of an eight-layer HBM3E earlier this year, which is expected to be integrated into Nvidia’s GPU and is scheduled for release in the second quarter.

The Future of AI Memory Chips

Samsung’s new HBM3E offers improved performance and capacity, surpassing the previous HBM3 version by more than 50 per cent. “The industry’s AI service providers are increasingly requiring HBM with higher capacity, and our new HBM3E 12H product has been designed to answer that need,” said Bae Yong-cheol, executive vice president of memory product planning at Samsung.

The company emphasized that this product would bring cost-saving effects to data centre companies that use a lot of AI memory chips. “The use of this product, which boasts increased performance and capacity, reduces the use of GPUs, allowing companies to save on total cost of ownership (TCO) and flexibly manage resources,” Samsung said.

For instance, a server system using HBM3E 12H is projected to raise average AI training speed by 34 per cent compared with one using HBM3 8H. In inference, it is expected to deliver up to 11.5 times more AI user services.
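A 34 per cent gain in training speed is not the same as a 34 per cent cut in training time. The sketch below makes the conversion explicit. The speedup figure is the one quoted above; the 100-hour baseline job is a hypothetical example, and the function names are illustrative only.

```python
# Projected speedup quoted in the article: 34 per cent faster than HBM3 8H.
TRAINING_SPEEDUP = 1.34


def relative_training_time(speedup: float) -> float:
    """Fraction of the original wall-clock time needed after a speedup."""
    return 1 / speedup


def hours_after_speedup(baseline_hours: float, speedup: float) -> float:
    """Wall-clock hours for the same job once the speedup applies."""
    return baseline_hours / speedup


if __name__ == "__main__":
    # Hypothetical baseline: a job that takes 100 hours on HBM3 8H.
    baseline = 100.0
    print(round(hours_after_speedup(baseline, TRAINING_SPEEDUP), 1))
    print(round(1 - relative_training_time(TRAINING_SPEEDUP), 3))
```

On these numbers, the hypothetical 100-hour job would finish in about 74.6 hours, a time saving of roughly 25 per cent.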

Samsung’s 12-layer HBM3E DRAM is more than a technological milestone. It is a strategic move that could reshape the AI memory chip market and establish Samsung as a dominant player in high-capacity HBM during the AI era.